portfolio

In God we trust, all others bring data. - William Edwards Deming (1900-1993)

Publications

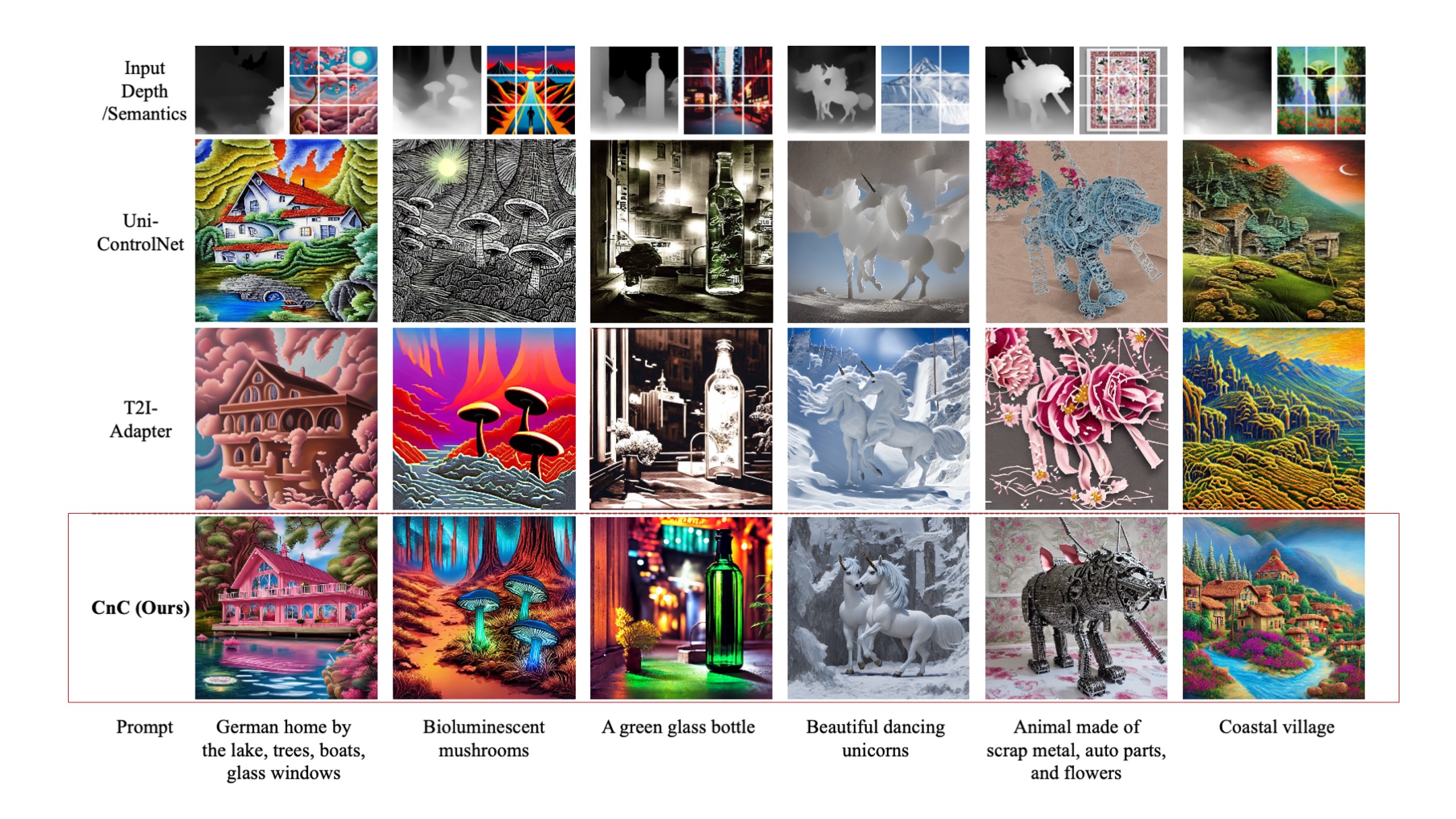

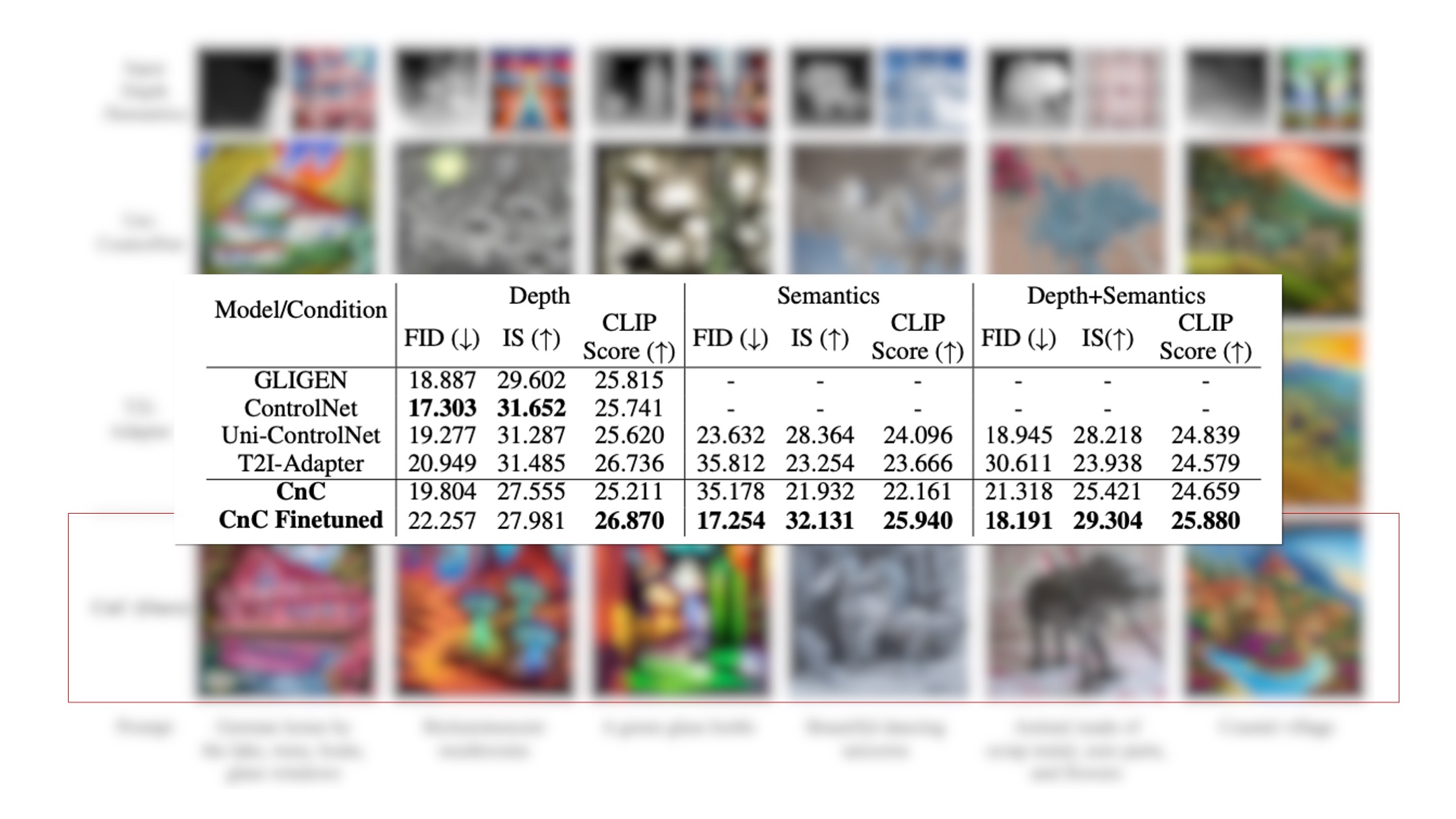

1. [ICLR 2024] Compose and Conquer: Diffusion-Based 3D Depth Aware Composable Image Synthesis

First Author, accepted to ICLR 2024 - 2024.01.16

Conditional diffusion models usually take in text along with two different types of conditions to generate an image:

- Local conditions, which condition the model on structural information through primitives such as depth maps and Canny edges.

- Global conditions, which condition the model on semantic information (color, identity, texture, etc.).

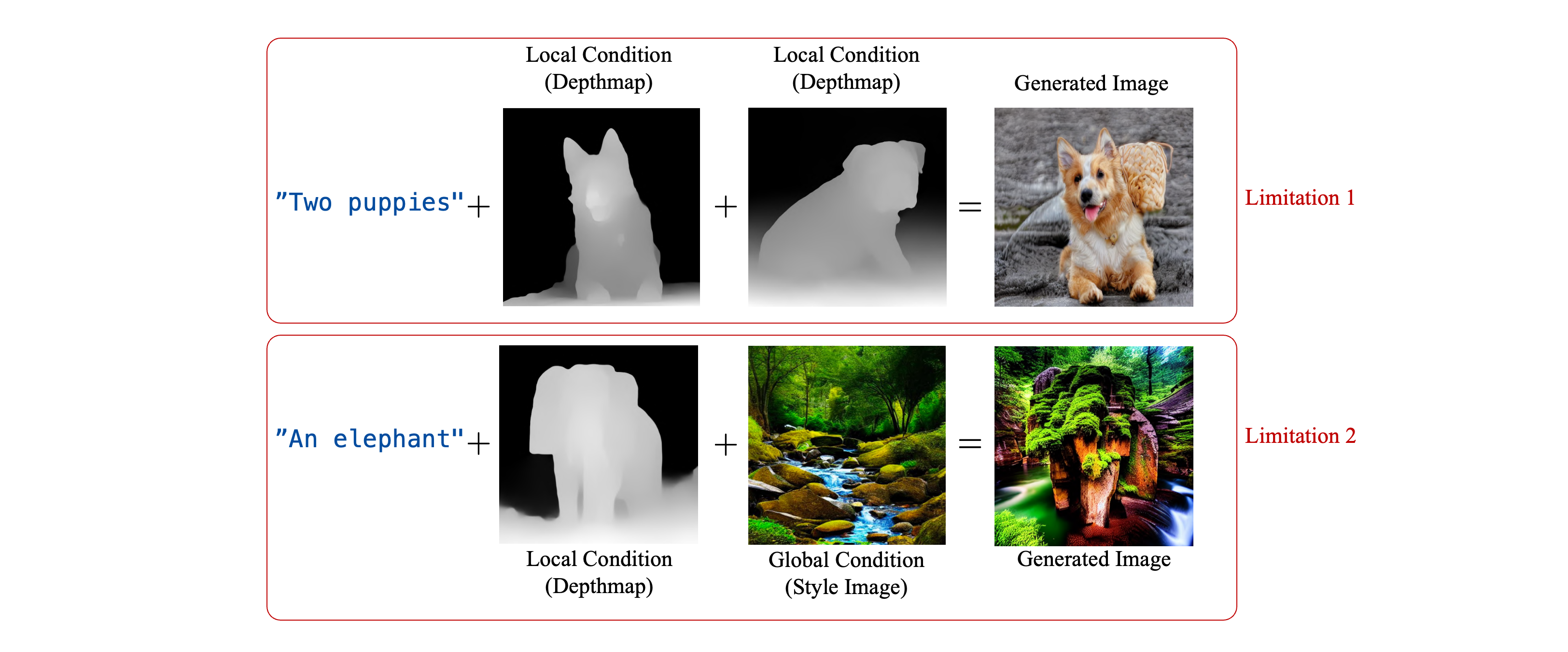

However, these models suffer from two limitations: they cannot disentangle the relative depth of multiple local conditions, and they cannot localize global conditions to a specific area.

- Limitation 1: Suppose you’d like to generate an image of two puppies, one in front of the other, each shaped by its own depth map. Models can’t tell that one puppy has to be placed in front of the other, so the two end up fused together.

- Limitation 2: How about an elephant standing in a forest, given the elephant’s shape (depth map) and the semantics of a forest image? Models can’t tell that the image semantics are supposed to go behind (or around) the elephant, so the elephant is ignored entirely.

We sought to create a model that could (1) distinguish where objects should be placed in relative depth, and (2) localize global semantics onto a user-defined area rather than the whole image.

We solved this by proposing a training paradigm and a novel inference algorithm: Depth Disentanglement Training and Soft Guidance.

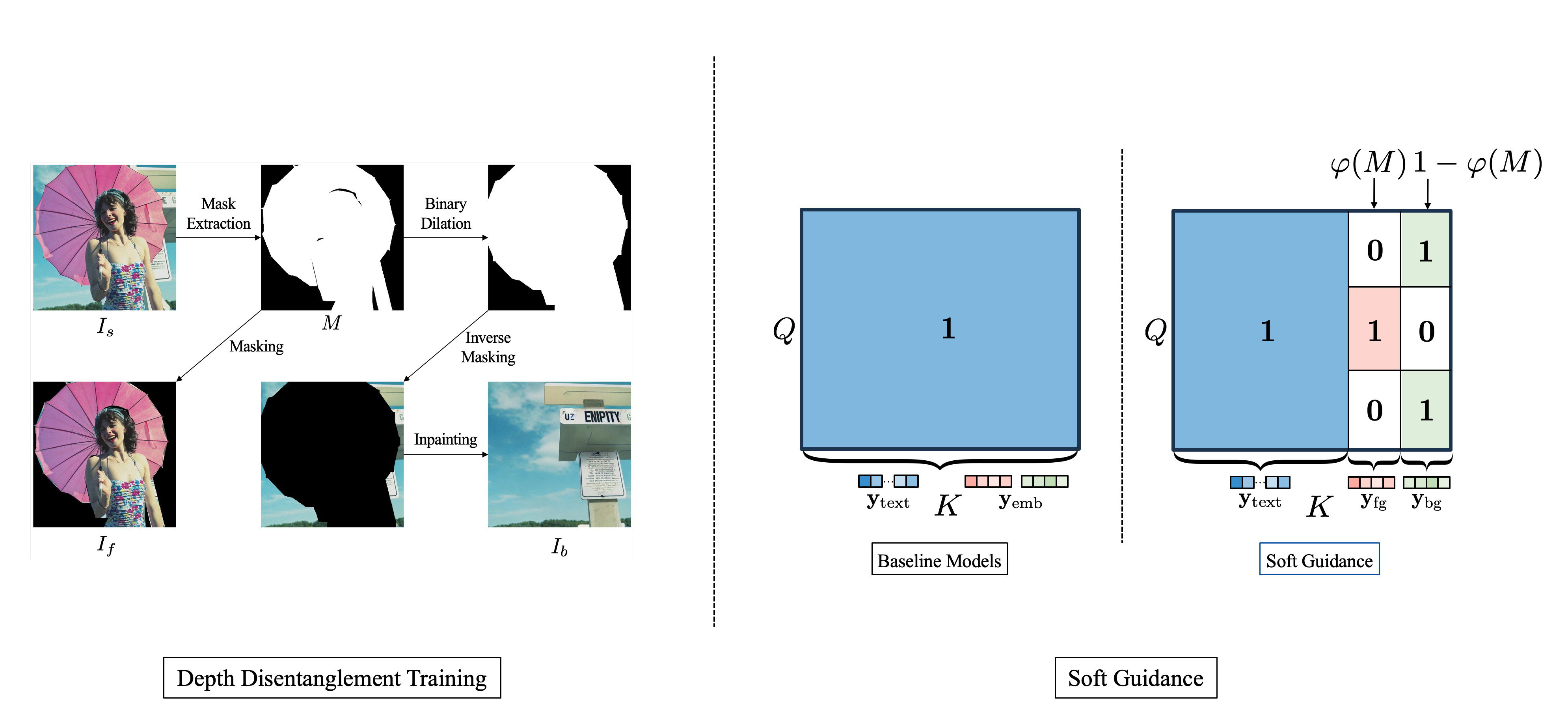

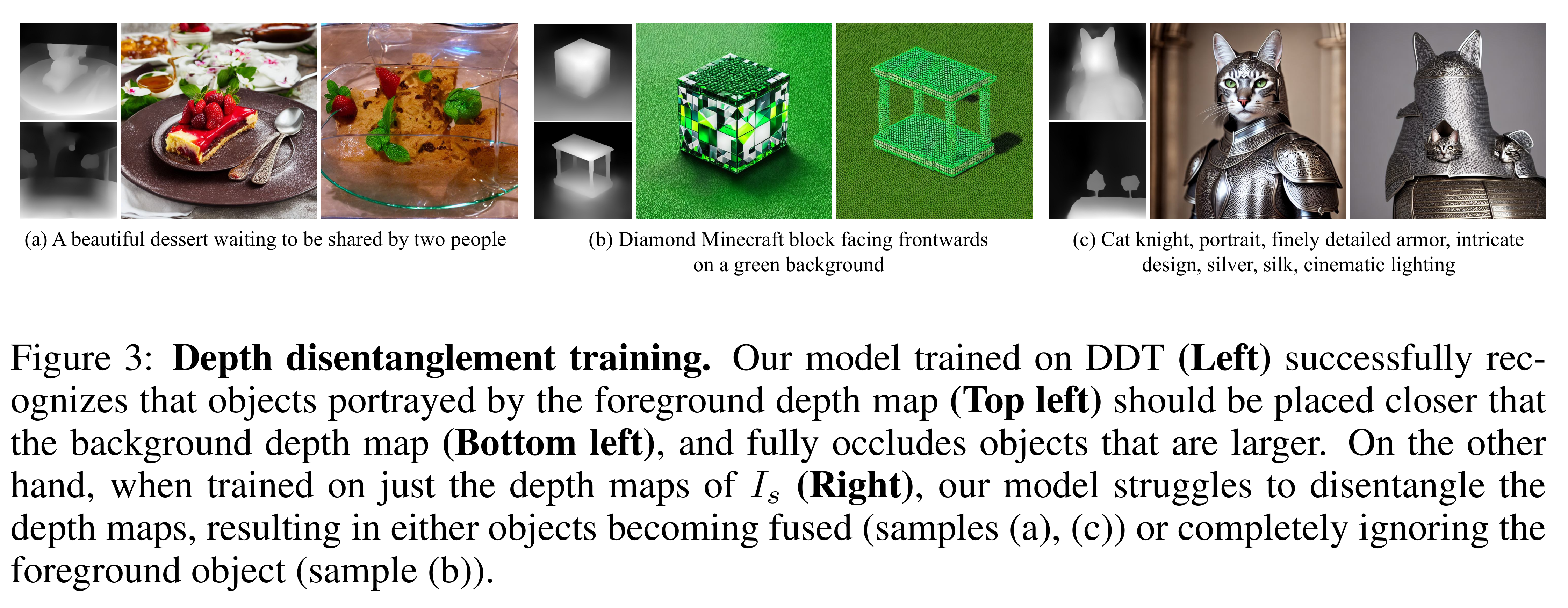

Depth Disentanglement Training decomposes the training data into three sub-components: the foreground, the background, and a mask, with the background recovered via inpainting. The background image provides structural information for the region initially occluded by the salient object, forcing the model to learn what lies behind another object.
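A minimal sketch of how one Depth Disentanglement Training triplet might be assembled, assuming a boolean salient-object mask is already available. `naive_inpaint` and `make_ddt_triplet` are hypothetical names; the mean-colour fill is only a placeholder for a real inpainting model, and the step of extracting depth maps from the foreground and background is omitted.

```python
import numpy as np

def naive_inpaint(image, mask):
    """Hypothetical placeholder inpainter: fill masked pixels with the mean colour
    of the visible pixels. A real pipeline would use a learned inpainting model."""
    out = image.astype(float).copy()
    fill = image[~mask].mean(axis=0)  # (C,) mean over all unmasked pixels
    out[mask] = fill
    return out

def make_ddt_triplet(image, mask):
    """Split an (H, W, C) image into foreground, background and mask,
    given an (H, W) boolean mask that is True on the salient object."""
    foreground = image * mask[..., None]     # keep only the salient object
    background = naive_inpaint(image, mask)  # object region filled in
    return foreground, background, mask

# Toy example: a bright 2x2 "object" on a flat background.
img = np.full((4, 4, 3), 0.2)
img[1:3, 1:3] = 0.9
obj_mask = np.zeros((4, 4), dtype=bool)
obj_mask[1:3, 1:3] = True
fg, bg, m = make_ddt_triplet(img, obj_mask)
```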

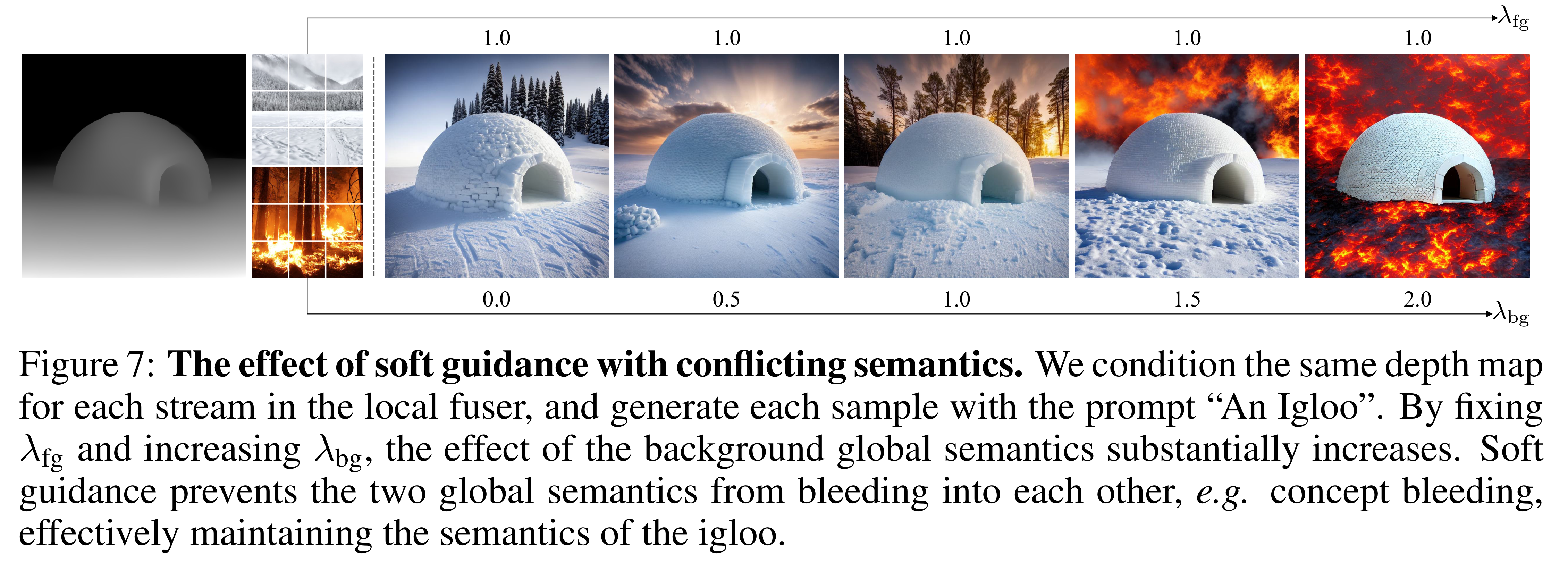

Soft Guidance partially masks out, within the model’s cross-attention layers, the pixels attending to a given semantic, forcing each region of the image to attend only to its corresponding semantic counterpart.
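The masking idea can be illustrated with a toy cross-attention layer in NumPy. This is a simplified, hard-masked sketch with hypothetical names (`build_region_mask`, `masked_cross_attention`) and a hypothetical token layout (one text token visible everywhere, one foreground-semantic token restricted to the user region, one background-semantic token restricted to the rest); CnC’s actual partial masking is not reproduced here.

```python
import numpy as np

def build_region_mask(region, token_groups):
    """region: (P,) bool, True for pixels inside the user-defined area.
    token_groups: one label per token: 'text', 'fg' or 'bg'.
    Returns (P, T) bool: may pixel i attend to token j?"""
    groups = np.array(token_groups)
    inside = region[:, None]
    text_ok = (groups == "text")[None, :]          # text tokens visible everywhere
    fg_ok = inside & (groups == "fg")[None, :]     # fg semantics only inside region
    bg_ok = ~inside & (groups == "bg")[None, :]    # bg semantics only outside region
    return text_ok | fg_ok | bg_ok

def masked_cross_attention(queries, keys, values, allowed):
    """Scaled dot-product cross-attention with disallowed logits set to -inf."""
    d = queries.shape[-1]
    logits = queries @ keys.T / np.sqrt(d)
    logits = np.where(allowed, logits, -np.inf)
    logits -= logits.max(axis=-1, keepdims=True)   # numerical stability
    weights = np.exp(logits)                       # exp(-inf) = 0 for masked tokens
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ values, weights

rng = np.random.default_rng(0)
pixels = rng.standard_normal((4, 16))              # 4 pixel queries
tokens = rng.standard_normal((3, 16))              # text, fg-semantic, bg-semantic
region = np.array([True, True, False, False])      # user-defined area
allowed = build_region_mask(region, ["text", "fg", "bg"])
out, attn = masked_cross_attention(pixels, tokens, tokens, allowed)
```

Keeping the text token visible to every pixel guarantees each softmax row has at least one finite logit, so no row collapses to all `-inf`.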

By training our model, Compose and Conquer (CnC), with Depth Disentanglement Training and applying Soft Guidance at inference, we’ve created a model that outperforms current state-of-the-art (SOTA) conditional generation models both qualitatively and quantitatively!

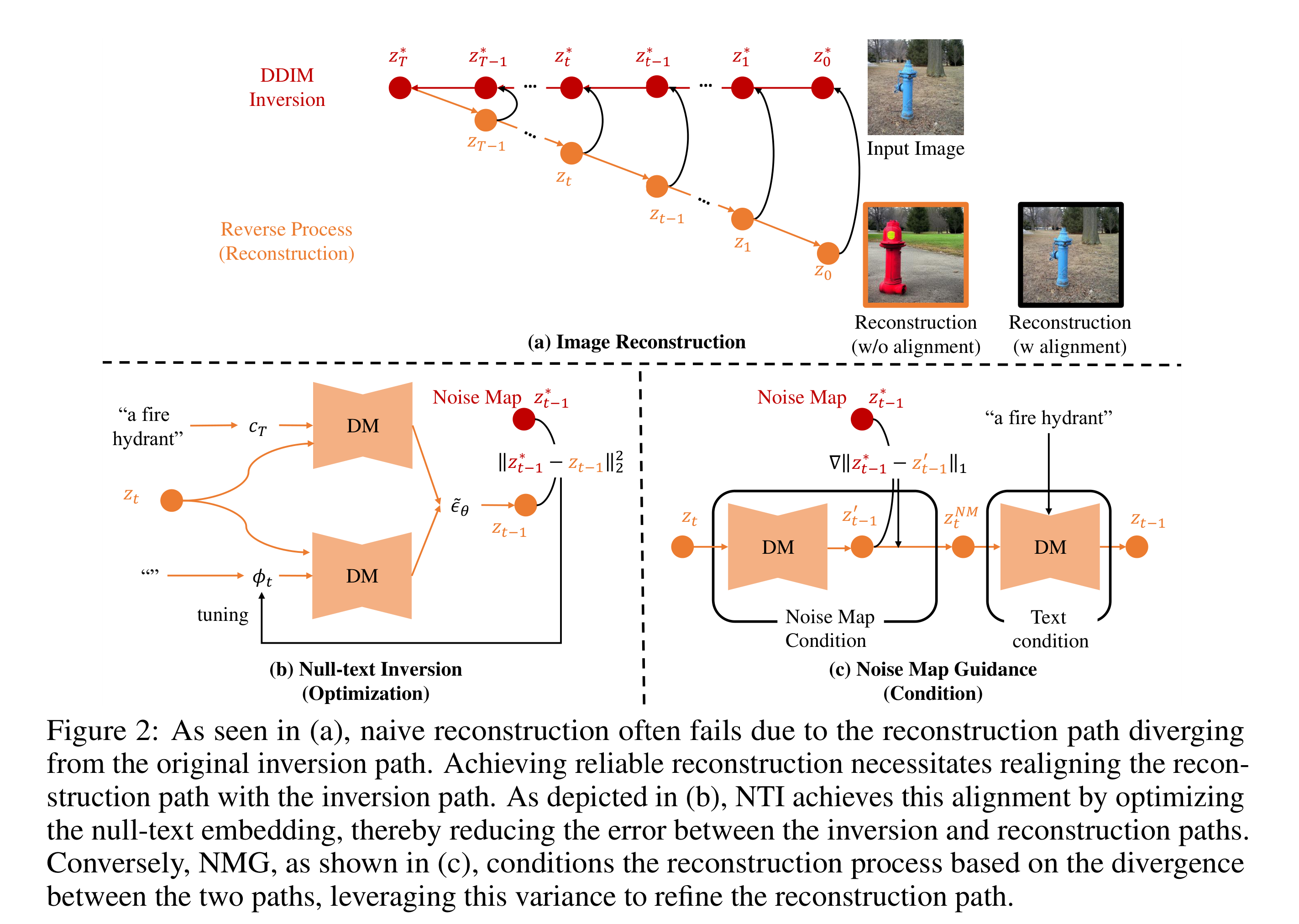

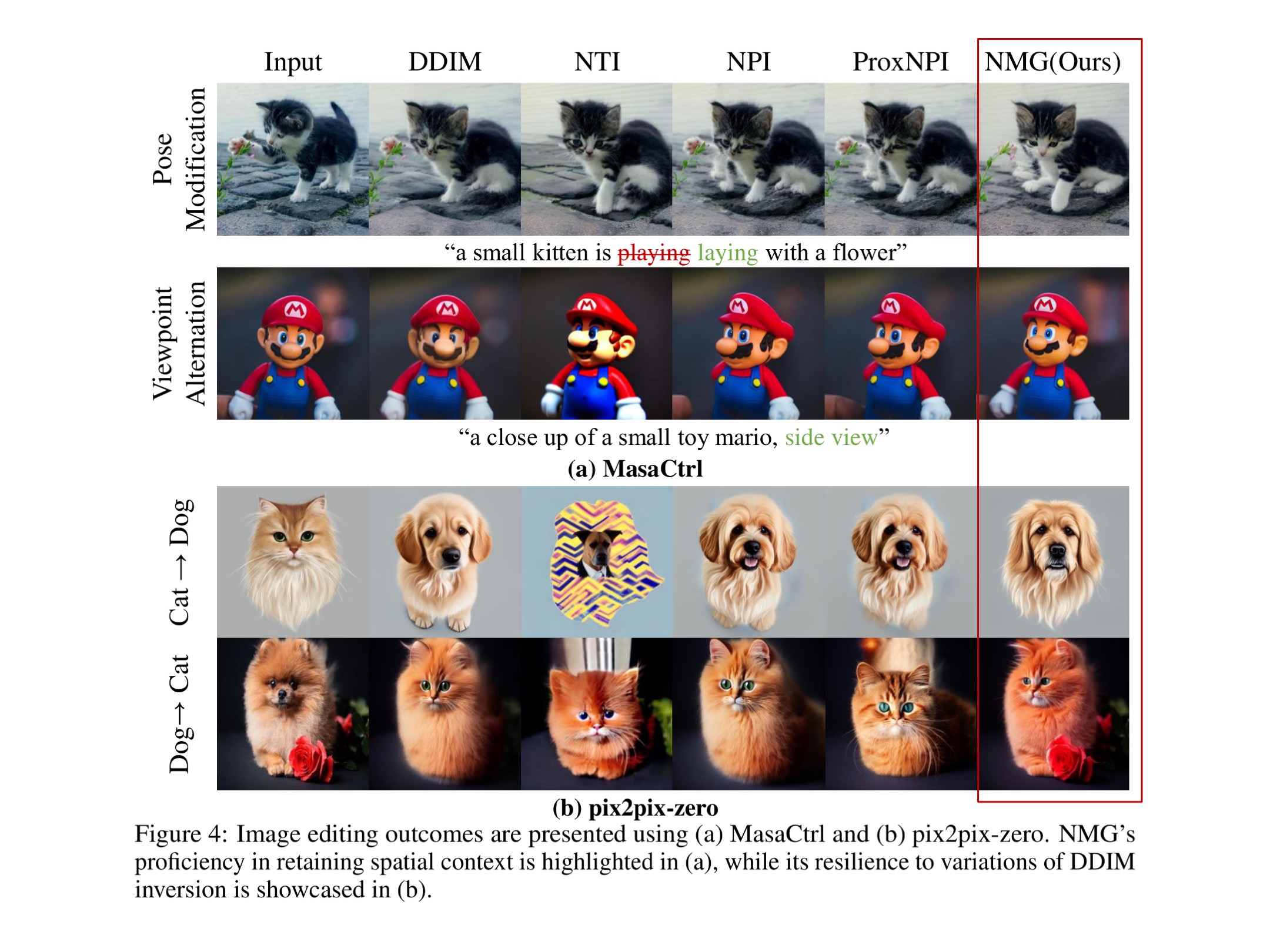

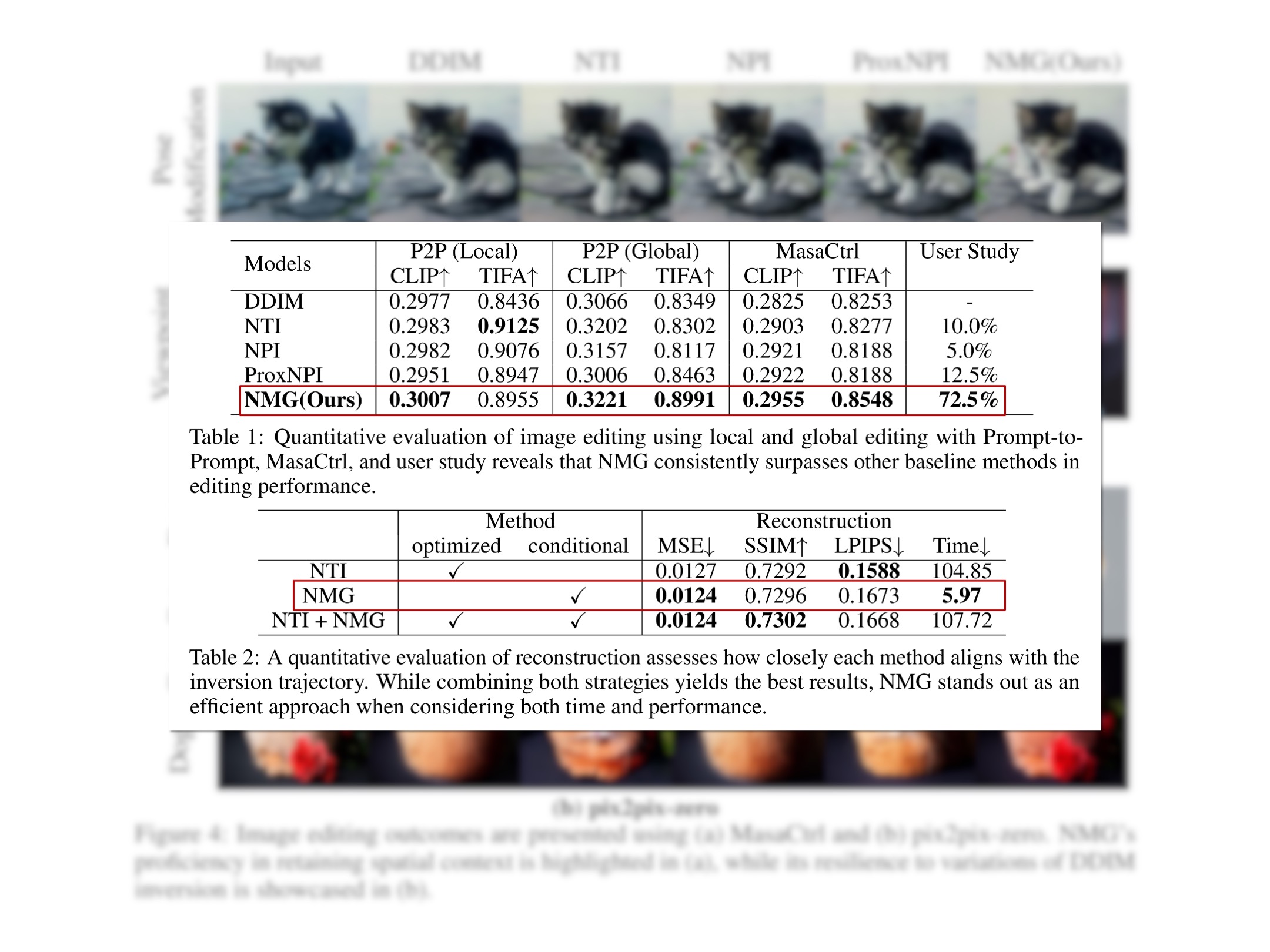

2. [ICLR 2024] Noise Map Guidance: Inversion with Spatial Context for Real Image Editing

Second Author, accepted to ICLR 2024 - 2024.01.16

Diffusion models are also referred to as score-based models, following findings that they can be interpreted as predicting the score (the gradient of the log-density) of the noised data distribution. These predicted scores can be plugged directly into the probability flow ODE (PF-ODE) that diffusion models follow, providing a way to encode and decode images without any stochasticity. This is favorable for editing real images with diffusion models: the only way to manipulate a real image is to first encode it into a latent that reproduces the image itself after solving the PF-ODE.
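The noise-prediction/score connection can be checked numerically. The sketch below uses the standard variance-preserving relation ∇ log p(z_t) ≈ -ε_θ(z_t, t) / sqrt(1 - ᾱ_t), with ᾱ_t the cumulative noise schedule, and assumes standard-normal toy data so that the true score (-z_t) and the optimal noise predictor are known in closed form.

```python
import numpy as np

def score_from_eps(eps_pred, alpha_bar):
    """Convert a noise prediction into a score estimate: -eps / sqrt(1 - alpha_bar)."""
    return -eps_pred / np.sqrt(1.0 - alpha_bar)

# Toy check: if x0 ~ N(0, I) and z_t = sqrt(a) * x0 + sqrt(1 - a) * eps,
# then z_t ~ N(0, I), the true score is -z_t, and the optimal noise
# predictor is E[eps | z_t] = sqrt(1 - a) * z_t.
alpha_bar = 0.3
rng = np.random.default_rng(0)
z_t = rng.standard_normal(5)
optimal_eps = np.sqrt(1.0 - alpha_bar) * z_t
score = score_from_eps(optimal_eps, alpha_bar)   # recovers -z_t
```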

However, there’s a caveat. Image editing methods rely on Classifier-Free Guidance (CFG), which causes the reconstruction PF-ODE to diverge from its original trajectory. Past inversion methods try to overcome this by optimizing each encoded latent along the PF-ODE trajectory, which is extremely slow, even by diffusion-model standards.

To avoid the slow process of optimizing each latent, we leverage noise maps, the intermediate latent representations of the PF-ODE from the previous timestep, to guide the re-traversal of the reconstruction PF-ODE path. This enables optimization-free inversion of real images while remaining robust to the structure of the original image. Each guided reconstruction step applies classifier-free guidance to the noise-map-guided latent \(\boldsymbol{z}_t^{NM}\) (with text condition \(c_T\), null condition \(\emptyset\), and guidance scale \(s_T\)), followed by the deterministic DDIM update:

\[\begin{gathered} \tilde{\epsilon}_\theta\left(\boldsymbol{z}_t^{N M}, c_T\right)=\epsilon_\theta\left(\boldsymbol{z}_t^{N M}, \emptyset\right)+s_T \cdot\left(\epsilon_\theta\left(\boldsymbol{z}_t^{N M}, c_T\right)-\epsilon_\theta\left(\boldsymbol{z}_t^{N M}, \emptyset\right)\right) \\ \boldsymbol{z}_{t-1}=\sqrt{\frac{\alpha_{t-1}}{\alpha_t}} \boldsymbol{z}_t^{N M}+\sqrt{\alpha_{t-1}}\left(\sqrt{\frac{1}{\alpha_{t-1}}-1}-\sqrt{\frac{1}{\alpha_t}-1}\right) \tilde{\epsilon}_\theta\left(\boldsymbol{z}_t^{N M}, c_T\right) \end{gathered}\]
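To see why CFG breaks naive inversion, the CFG and DDIM update equations can be run with a toy noise predictor. The predictor (`toy_eps`), the 10-step schedule, and the guidance scale of 7.5 are assumptions for illustration; the snippet shows the divergence problem that motivates NMG, not NMG itself.

```python
import numpy as np

def cfg_noise(eps_fn, z, text_cond, scale):
    """Classifier-free guidance: eps(z, null) + s_T * (eps(z, c_T) - eps(z, null))."""
    eps_null = eps_fn(z, None)
    eps_text = eps_fn(z, text_cond)
    return eps_null + scale * (eps_text - eps_null)

def ddim_step(z, eps, alpha_from, alpha_to):
    """Deterministic DDIM update between cumulative schedule values (alpha-bar).
    alpha_to > alpha_from denoises (reconstruction); alpha_to < alpha_from inverts."""
    return (np.sqrt(alpha_to / alpha_from) * z
            + np.sqrt(alpha_to) * (np.sqrt(1 / alpha_to - 1) - np.sqrt(1 / alpha_from - 1)) * eps)

# Hypothetical toy predictor: ignores z and returns a fixed vector per condition.
rng = np.random.default_rng(0)
EPS_NULL, EPS_TEXT = rng.standard_normal(8), rng.standard_normal(8)

def toy_eps(z, cond):
    return EPS_NULL if cond is None else EPS_TEXT

ALPHAS = np.linspace(0.999, 0.05, 11)  # index 0 = clean image, index 10 = noisiest latent

def invert(x0, scale):
    z = x0
    for t in range(len(ALPHAS) - 1):
        z = ddim_step(z, cfg_noise(toy_eps, z, "prompt", scale), ALPHAS[t], ALPHAS[t + 1])
    return z

def reconstruct(z, scale):
    for t in range(len(ALPHAS) - 1, 0, -1):
        z = ddim_step(z, cfg_noise(toy_eps, z, "prompt", scale), ALPHAS[t], ALPHAS[t - 1])
    return z

x0 = rng.standard_normal(8)
z_T = invert(x0, scale=1.0)
err_plain = np.abs(reconstruct(z_T, scale=1.0) - x0).max()  # same noise both ways: ~0
err_cfg = np.abs(reconstruct(z_T, scale=7.5) - x0).max()    # guided decode drifts away
```

Inverting and reconstructing with the same effective noise retraces the trajectory exactly, while reconstructing with a larger guidance scale lands far from the original image.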

We found that with our proposed method, Noise Map Guidance (NMG), existing real-image editing methods improved drastically while running 20 times faster!

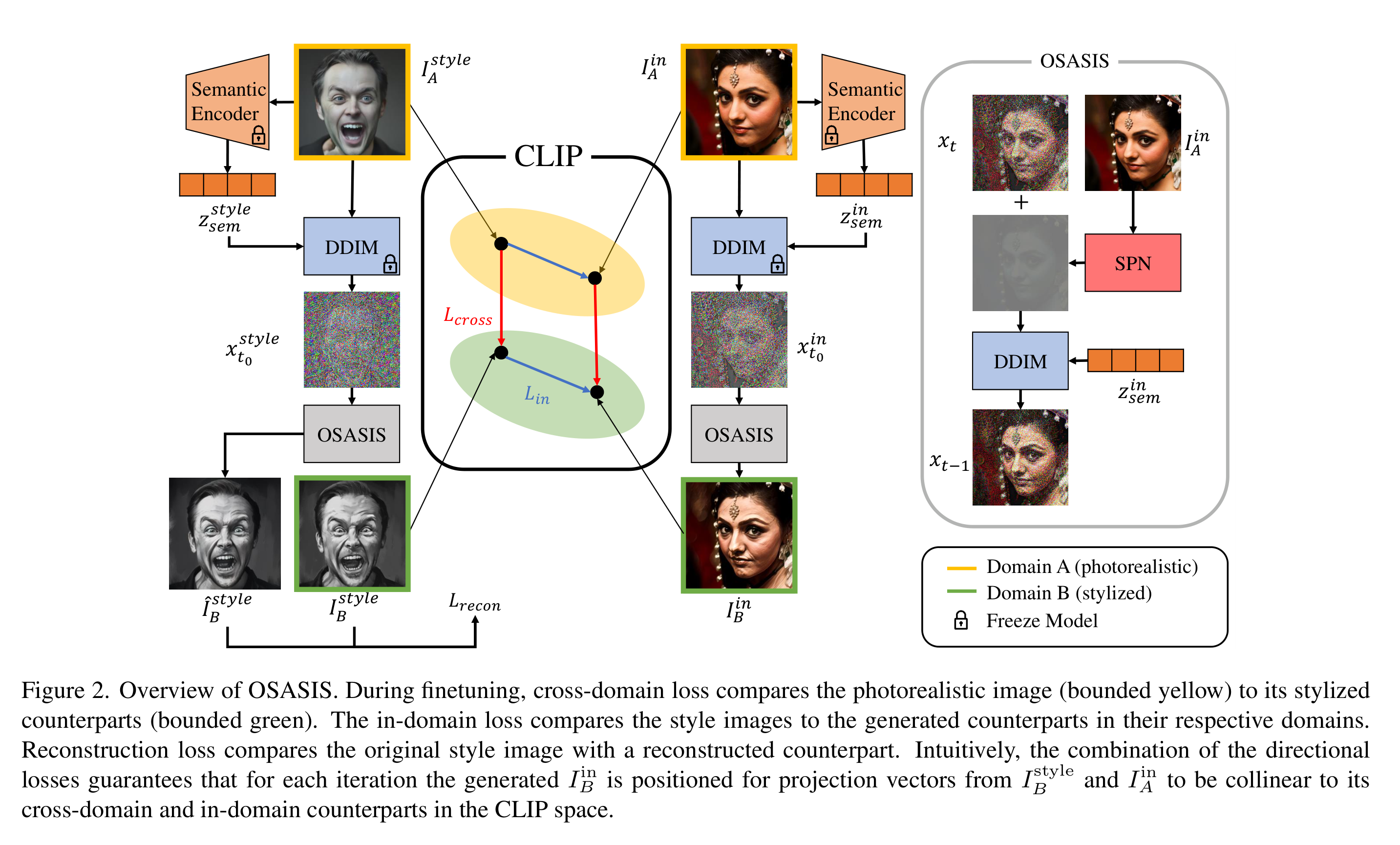

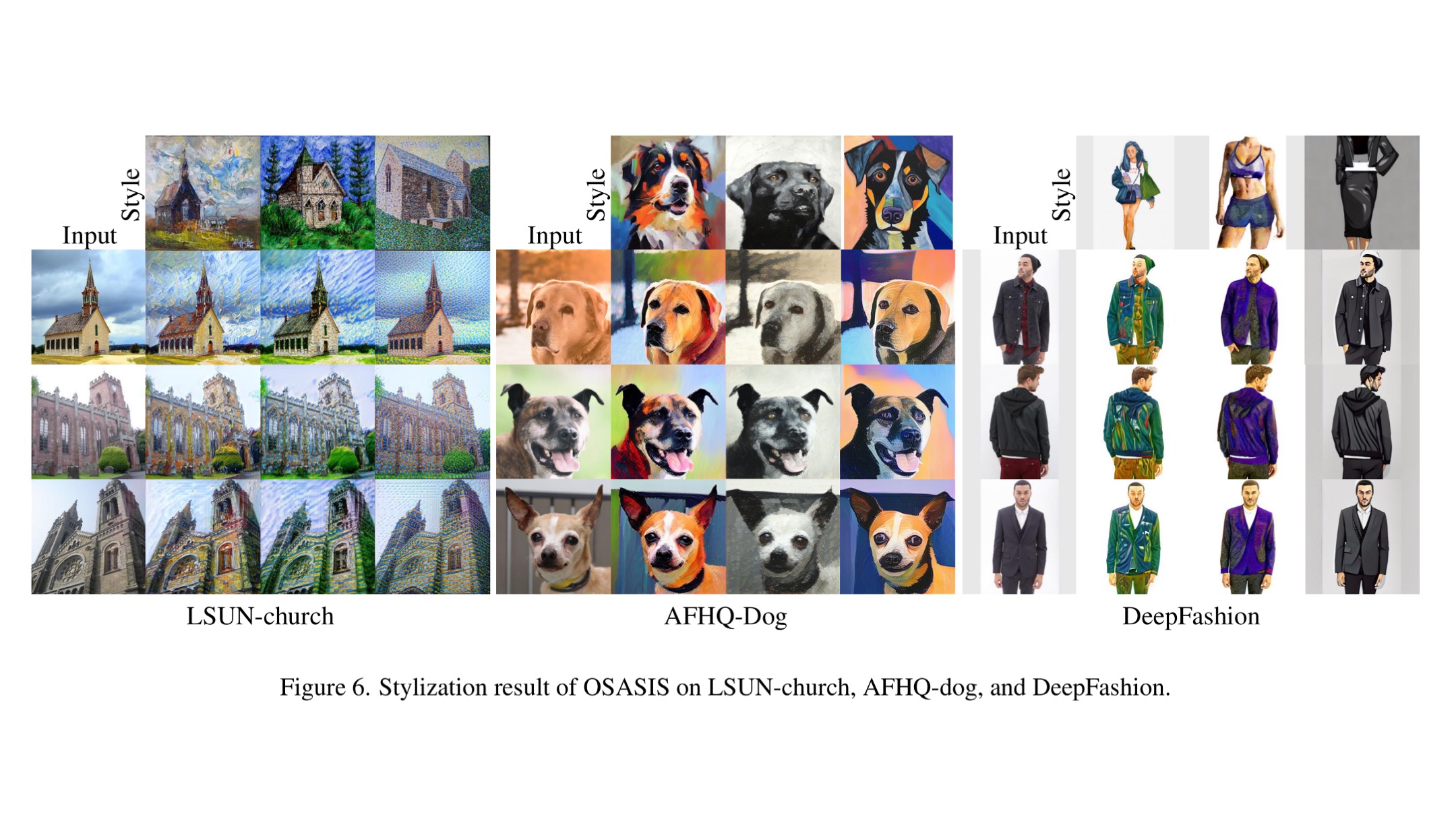

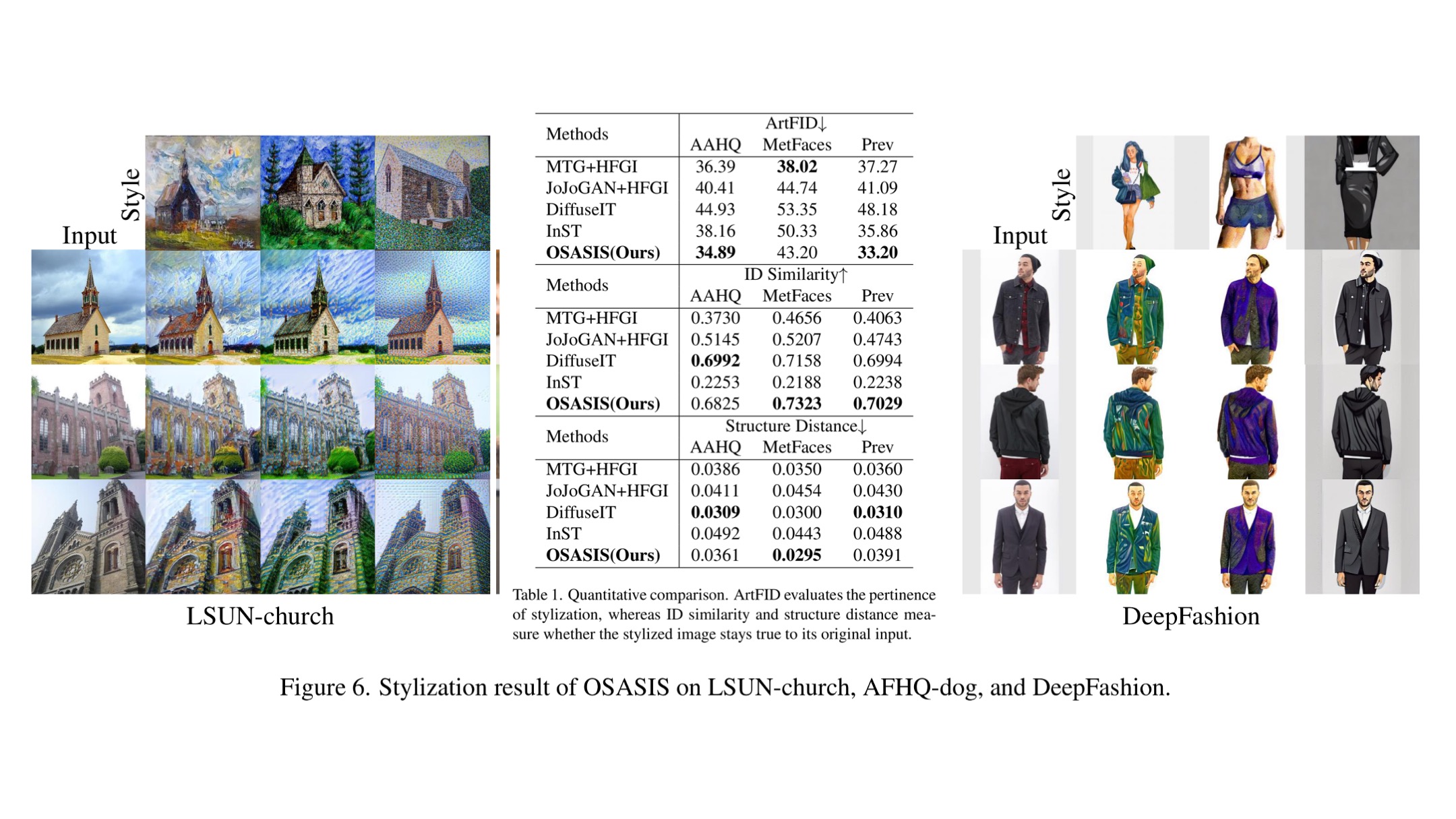

3. [CVPR 2024] One-Shot Structure-Aware Stylized Image Synthesis

Second Author, accepted to CVPR 2024 - 2024.02.27

Diffusion models are considered very attractive for stylization due to their potency and expressiveness. While GAN-based stylization methods have been thoroughly explored, stylization via diffusion models is relatively underexplored. GAN-based stylization methods often fail to preserve rarely seen attributes in images, and diffusion-based stylization methods require many style images to perform stylization (multi-shot).

To this end, we proposed One-Shot Structure-Aware Stylized Image Synthesis (OSASIS), a diffusion model capable of disentangling the structure and semantics of an image and stylizing real images with just a single style reference. OSASIS leverages the CLIP space: we train the model so that, across four images, the CLIP-space direction between each image pair stays parallel to the direction between its counterpart pair. We also formulate a Structure Preserving Network (SPN) that ensures the stylized output retains the structure of the original input image.
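One way to express such a "parallel directions" objective is a directional cosine loss between two CLIP-space difference vectors. This is a sketch under assumptions: the embeddings are taken as precomputed vectors, `directional_loss` is a hypothetical name, and which four images form the two pairs is not specified here.

```python
import numpy as np

def directional_loss(e_a, e_b, e_c, e_d):
    """1 - cos(e_b - e_a, e_d - e_c).
    0 when the two CLIP-space directions are parallel, 1 when orthogonal, 2 when opposite."""
    u = e_b - e_a
    v = e_d - e_c
    return 1.0 - float(u @ v / (np.linalg.norm(u) * np.linalg.norm(v)))

# Toy 2-D "embeddings" standing in for precomputed CLIP vectors.
parallel = directional_loss(np.array([0.0, 0.0]), np.array([1.0, 0.0]),
                            np.array([1.0, 1.0]), np.array([3.0, 1.0]))
orthogonal = directional_loss(np.zeros(2), np.array([1.0, 0.0]),
                              np.zeros(2), np.array([0.0, 1.0]))
```

Minimizing this loss only constrains the direction of change, not its magnitude, which is why it is a common choice when one image pair should "move" in CLIP space the same way as another.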

Designed to be a training-dataset-free one-shot model (requiring only a single reference image), OSASIS robustly preserves the structure of rarely seen attributes in a dataset!

Paper link [Code coming soon!]

Projects

1. AI Spark Challenge - IRDIS (Immediate Rescue Based Disaster Response System)

Ministry of Science and ICT-led AI Tournament, Final Selection - 2022.04

For the hackathon, I led team IRDIS (Immediate Rescue Based Disaster Response System), where we were tasked with developing a disaster response solution.

We decided to use the xView2 dataset, which contains 22K satellite images. Each image has a pre/post-disaster counterpart, along with labels describing what disaster had occurred, the level of damage, and segmentation labels for roads and buildings.

We first trained two separate UNet models on the xView2 dataset for semantic segmentation, one producing segmentation maps for buildings and the other for roads. We then trained a third model, conditioned on the building type and segmentation map, to predict each building’s damage level.

We then created a scoring/ranking system based on the Analytic Hierarchy Process (AHP) to rank which buildings were in need of immediate rescue. The AHP criteria were the type of building, how many buildings were in proximity, how badly damaged the buildings were, the time of day, and what kind of road led up to the building.
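A minimal AHP sketch in plain Python: priority weights are approximated with the row geometric mean of a reciprocal pairwise-comparison matrix, checked with Saaty’s consistency ratio, and used to rank buildings by a weighted score. The pairwise judgments, criterion scores, and building IDs below are hypothetical, not the values used by IRDIS.

```python
import math

CRITERIA = ["building_type", "nearby_buildings", "damage_level", "time_of_day", "road_type"]
# Saaty's random consistency index by matrix size.
RANDOM_INDEX = {3: 0.58, 4: 0.90, 5: 1.12, 6: 1.24, 7: 1.32, 8: 1.41, 9: 1.45}

def ahp_weights(matrix):
    """Approximate the principal eigenvector with normalized row geometric means."""
    n = len(matrix)
    geo = [math.prod(row) ** (1.0 / n) for row in matrix]
    total = sum(geo)
    return [g / total for g in geo]

def consistency_ratio(matrix, weights):
    """CR = CI / RI with CI = (lambda_max - n) / (n - 1); CR < 0.1 is the usual acceptance rule."""
    n = len(matrix)
    if n < 3:
        return 0.0  # 1x1 and 2x2 reciprocal matrices are always consistent
    aw = [sum(a * w for a, w in zip(row, weights)) for row in matrix]
    lambda_max = sum(x / w for x, w in zip(aw, weights)) / n
    return ((lambda_max - n) / (n - 1)) / RANDOM_INDEX[n]

def rank_buildings(scores, weights):
    """scores: building id -> per-criterion scores in [0, 1] (higher = more urgent)."""
    totals = {b: sum(w * s for w, s in zip(weights, vals)) for b, vals in scores.items()}
    return sorted(totals, key=totals.get, reverse=True)

# Hypothetical reciprocal judgments (row criterion vs column criterion, Saaty 1-9 scale).
PAIRWISE = [
    [1,   2,   1/3, 3, 2],
    [1/2, 1,   1/4, 2, 1],
    [3,   4,   1,   5, 4],
    [1/3, 1/2, 1/5, 1, 1/2],
    [1/2, 1,   1/4, 2, 1],
]
BUILDINGS = {
    "bldg_A": [0.8, 0.6, 1.0, 0.5, 0.7],
    "bldg_B": [0.2, 0.3, 0.4, 0.5, 0.1],
}
weights = ahp_weights(PAIRWISE)
cr = consistency_ratio(PAIRWISE, weights)
ranking = rank_buildings(BUILDINGS, weights)
```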

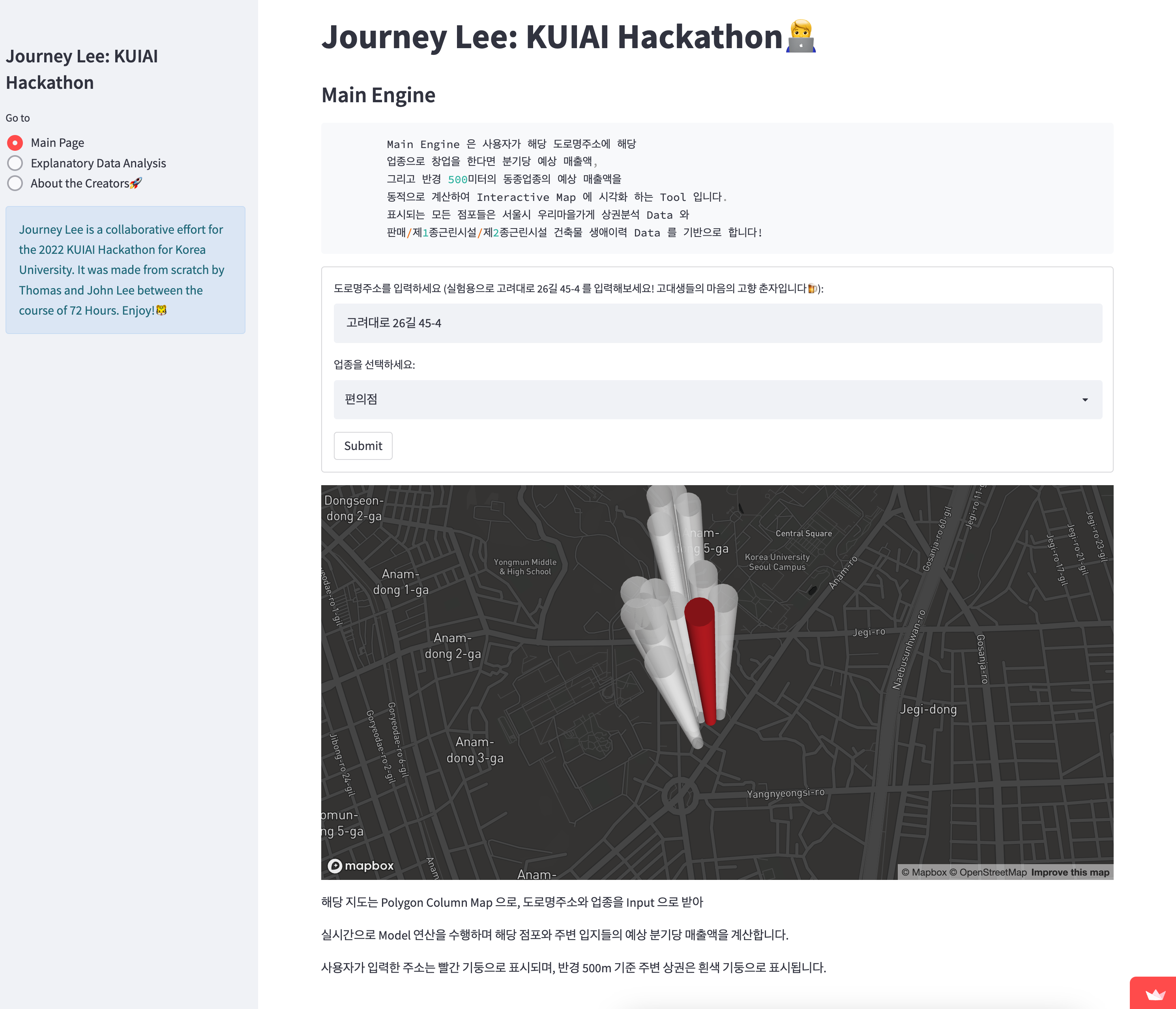

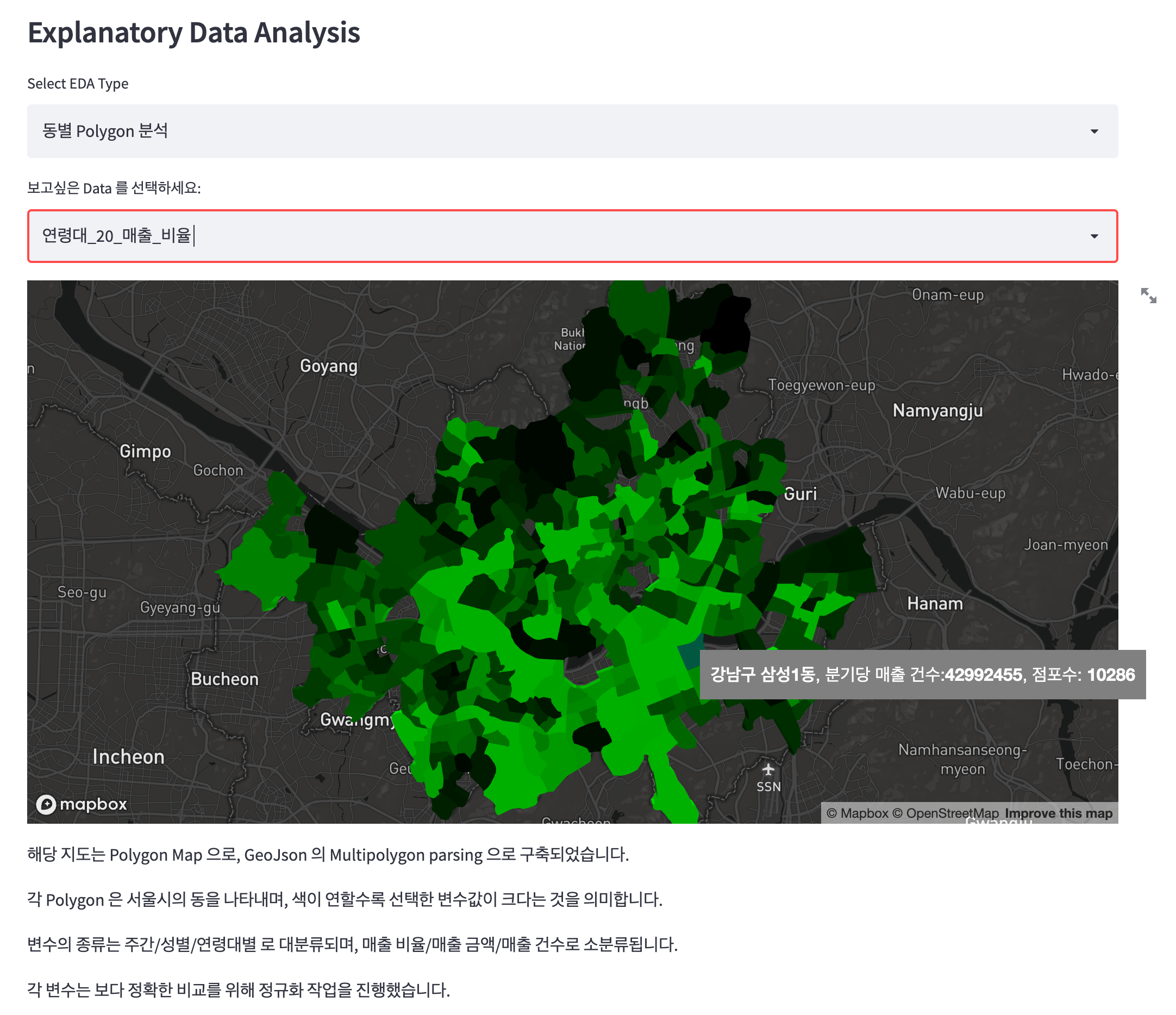

2. KUIAI Hackathon - Multi-polygon map based business location recommendation system

Korea Electronics Technology Institute (KETI)-led AI Hackathon, 3rd place/20 teams - 2022.01

For the hackathon, I led team Journey Lee, where we were tasked with deriving meaningful insights from data collected from commercial stores and buildings across Seoul, South Korea, within 3 days. We decided to build a location recommendation system to support the commercial success of businesses.

We trained a simple model with 8 fully connected (FC) layers to predict the quarterly sales of a business from multiple variables, including but not limited to geographic coordinates, type of business, sales per day of the week, and sales per age group. We then built an online tool that takes in a type of business and a geographic coordinate, and outputs the predicted monthly sales for that location and for every other viable address within a 500-meter radius.

The online tool also includes a GeoPandas-based EDA tool that visualizes the various variables used to train the model.
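The radius search behind the tool can be sketched with the haversine great-circle distance. `predict_sales` stands in for the trained 8-layer model, and the candidate addresses and coordinates are hypothetical.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in meters

def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two (lat, lon) points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))

def recommend(business_type, lat, lon, candidates, predict_sales, radius_m=500):
    """candidates: list of (address, lat, lon).
    predict_sales: hypothetical callable standing in for the trained model.
    Returns (address, predicted sales) pairs within the radius, best first."""
    nearby = [(addr, la, lo) for addr, la, lo in candidates
              if haversine_m(lat, lon, la, lo) <= radius_m]
    scored = [(addr, predict_sales(business_type, la, lo)) for addr, la, lo in nearby]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)

def constant_model(business_type, lat, lon):
    """Dummy predictor used only for this example."""
    return 1.0

CANDIDATES = [("near", 37.5695, 126.9780), ("far", 37.5765, 126.9780)]  # ~334 m and ~1.1 km north
results = recommend("cafe", 37.5665, 126.9780, CANDIDATES, predict_sales=constant_model)
```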

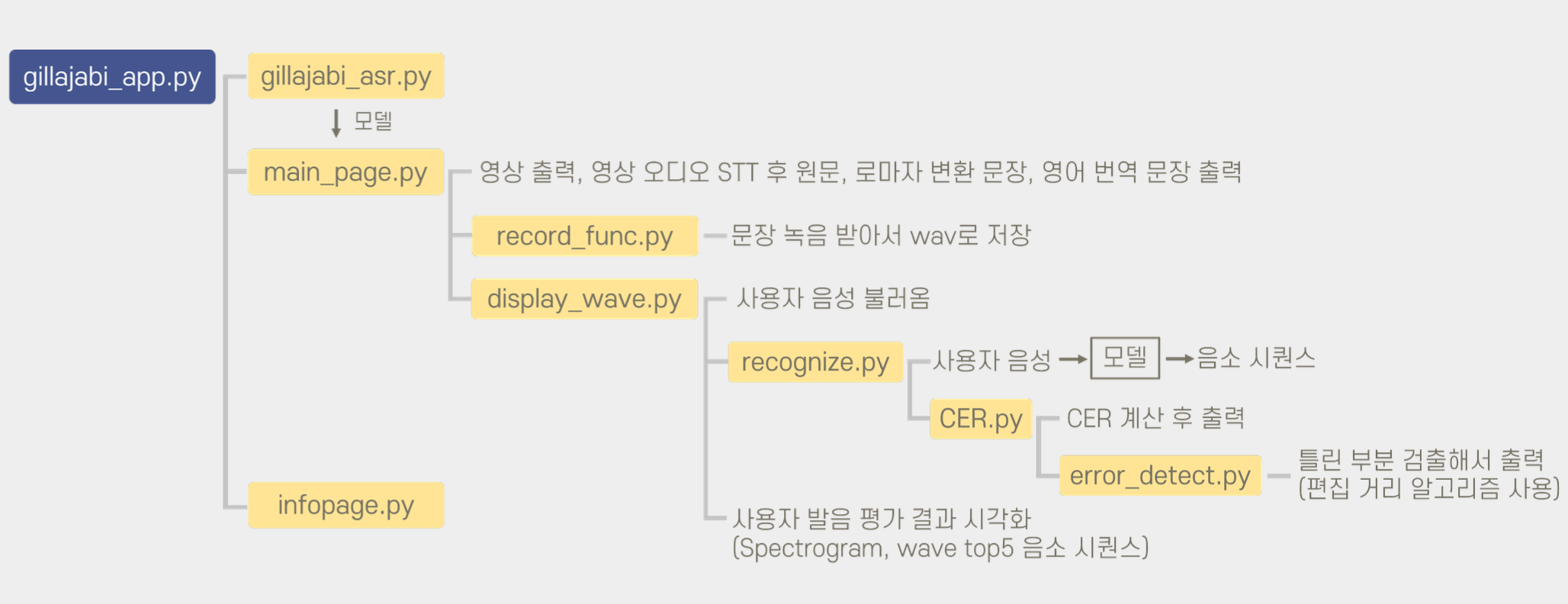

3. K-Data Datacampus - Audio-Image Korean phoneme recognition model

Korea Data Agency-led AI Tournament, 2nd place/40 teams - 2021.09

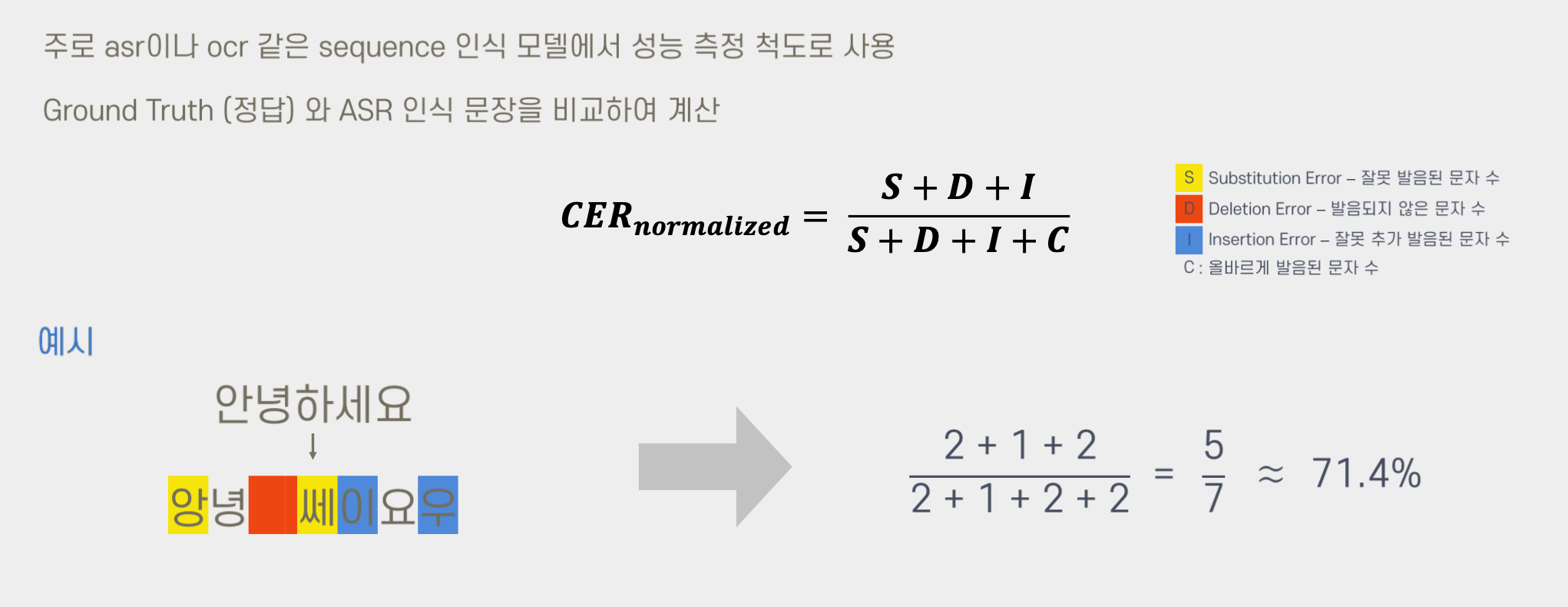

For the tournament, I led team Gillajab-i. We first developed and trained a transformer-based ASR model for Korean phoneme recognition, then served a web app that scores Korean word pronunciation errors using a Levenshtein-distance-based character error rate (CER) metric.

We collected 1000 hours of raw Korean speech data, re-tokenized the ground-truth transcripts into phonemes, and trained a ViT. The model took in audio, converted it to Mel spectrograms, and predicted the phonemes (instead of words) in the clip, reaching a test accuracy of 93%.
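As an illustration of re-tokenizing Korean text below the word level, Hangul syllables can be decomposed into their jamo using the Unicode composition formula, syllable = 0xAC00 + (initial × 21 + medial) × 28 + final. This is an assumed simplification: jamo are written letters, and true phoneme labels would additionally apply Korean pronunciation rules such as liaison. `to_jamo` is a hypothetical name.

```python
# Compatibility jamo in Unicode composition order.
INITIALS = ["ㄱ", "ㄲ", "ㄴ", "ㄷ", "ㄸ", "ㄹ", "ㅁ", "ㅂ", "ㅃ", "ㅅ",
            "ㅆ", "ㅇ", "ㅈ", "ㅉ", "ㅊ", "ㅋ", "ㅌ", "ㅍ", "ㅎ"]
MEDIALS = ["ㅏ", "ㅐ", "ㅑ", "ㅒ", "ㅓ", "ㅔ", "ㅕ", "ㅖ", "ㅗ", "ㅘ", "ㅙ",
           "ㅚ", "ㅛ", "ㅜ", "ㅝ", "ㅞ", "ㅟ", "ㅠ", "ㅡ", "ㅢ", "ㅣ"]
FINALS = ["", "ㄱ", "ㄲ", "ㄳ", "ㄴ", "ㄵ", "ㄶ", "ㄷ", "ㄹ", "ㄺ", "ㄻ", "ㄼ", "ㄽ", "ㄾ",
          "ㄿ", "ㅀ", "ㅁ", "ㅂ", "ㅄ", "ㅅ", "ㅆ", "ㅇ", "ㅈ", "ㅊ", "ㅋ", "ㅌ", "ㅍ", "ㅎ"]
SYLLABLE_FIRST, SYLLABLE_LAST = 0xAC00, 0xD7A3

def to_jamo(text):
    """Split precomposed Hangul syllables into jamo tokens; whitespace is dropped
    and any other character is passed through unchanged."""
    tokens = []
    for ch in text:
        code = ord(ch)
        if SYLLABLE_FIRST <= code <= SYLLABLE_LAST:
            initial, rest = divmod(code - SYLLABLE_FIRST, 21 * 28)
            medial, final = divmod(rest, 28)
            tokens += [INITIALS[initial], MEDIALS[medial]]
            if FINALS[final]:  # index 0 means "no final consonant"
                tokens.append(FINALS[final])
        elif not ch.isspace():
            tokens.append(ch)
    return tokens

greeting = to_jamo("안녕")
```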

Targeted at foreigners learning to speak Korean, the web app would follow these steps:

- A user chooses or uploads a short video of someone saying the phrase they want to learn

- Audio is extracted from the video

- The model converts the audio into Korean text

- The Korean text is converted into romanized Korean (for the user to read)

- The Korean text is translated into English (for the user to understand)

The user would then record themselves practicing the pronunciation, and our ViT would infer the phonemes from the recording and calculate the error rate via Levenshtein distance.
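The scoring step can be sketched as a character error rate: the Levenshtein distance between the reference and recognized sequences, divided by the reference length. Function names are hypothetical; the same code works on strings or on lists of phoneme/jamo tokens.

```python
def levenshtein(ref, hyp):
    """Minimum number of insertions, deletions and substitutions turning ref into hyp.
    Uses a single rolling row of the dynamic-programming table."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            curr.append(min(prev[j] + 1,              # deletion
                            curr[j - 1] + 1,          # insertion
                            prev[j - 1] + (r != h)))  # substitution (free if equal)
        prev = curr
    return prev[-1]

def cer(ref, hyp):
    """Character (or token) error rate relative to the reference length."""
    if len(ref) == 0:
        raise ValueError("reference must be non-empty")
    return levenshtein(ref, hyp) / len(ref)

# Example: target phrase vs. what the learner's recording was recognized as.
score = cer(["ㅎ", "ㅏ", "ㄴ"], ["ㅎ", "ㅓ", "ㄴ"])  # one substitution out of three tokens
```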

4. Smart Campus Datathon - Unsupervised embedding model based scholarship parsing/recommendation system

Korea University Datahub-led AI Datathon, 2nd place/15 teams - 2021.09

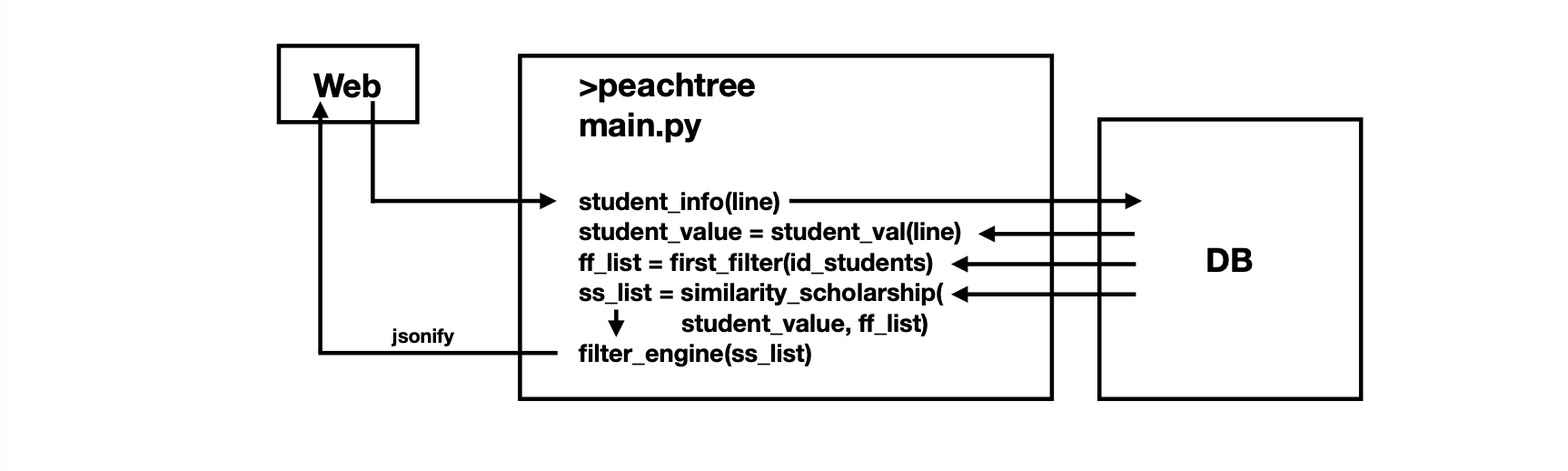

For the datathon, I led team Peachtree, where we developed a web app with an embedded unsupervised embedding model that parsed and recommended scholarships based on a user’s age, major, GPA, home address, previous scholarship grants, and scholarship eligibility.

We first crawled data from major scholarship-hosting platforms targeted at undergraduate students and preprocessed the unstructured data. We then used Doc2Vec to embed all the scholarships and DBSCAN to cluster them. To recommend scholarships, ‘anchor’ students were matched with a cluster based on their previous scholarship grants, and the cosine similarity between a query student and every ‘anchor’ student was computed to place the query student in a cluster. A final rule-based algorithm filters out ineligible scholarships, and the student is recommended the scholarships left in the cluster.
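The cluster-assignment and filtering steps can be sketched as follows. The student vectors, anchors, scholarships, and the GPA-only eligibility rule are hypothetical, and assigning the student to the cluster of the single most similar anchor is one assumed reading of the procedure.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def assign_cluster(student_vec, anchors):
    """anchors: list of (vector, cluster_id). Assumption: join the most similar anchor's cluster."""
    _, cluster_id = max(anchors, key=lambda anchor: cosine(student_vec, anchor[0]))
    return cluster_id

def recommend(student, student_vec, anchors, clusters, is_eligible):
    """Names of scholarships in the assigned cluster that pass the eligibility rule."""
    cluster_id = assign_cluster(student_vec, anchors)
    return [s["name"] for s in clusters[cluster_id] if is_eligible(student, s)]

def gpa_rule(student, scholarship):
    """Hypothetical eligibility rule: only checks a minimum GPA."""
    return student["gpa"] >= scholarship["min_gpa"]

# Hypothetical data: two scholarship clusters, one anchor student per cluster.
CLUSTERS = {
    0: [{"name": "STEM Merit", "min_gpa": 3.5}, {"name": "Engineering Aid", "min_gpa": 3.0}],
    1: [{"name": "Arts Grant", "min_gpa": 2.5}],
}
ANCHORS = [([1.0, 0.0, 0.2], 0), ([0.0, 1.0, 0.1], 1)]
picks = recommend({"gpa": 3.2}, [0.9, 0.1, 0.3], ANCHORS, CLUSTERS, gpa_rule)
```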